Transforming Filmmaking: Meta’s MoCha AI Creates Realistic Talking Video Characters

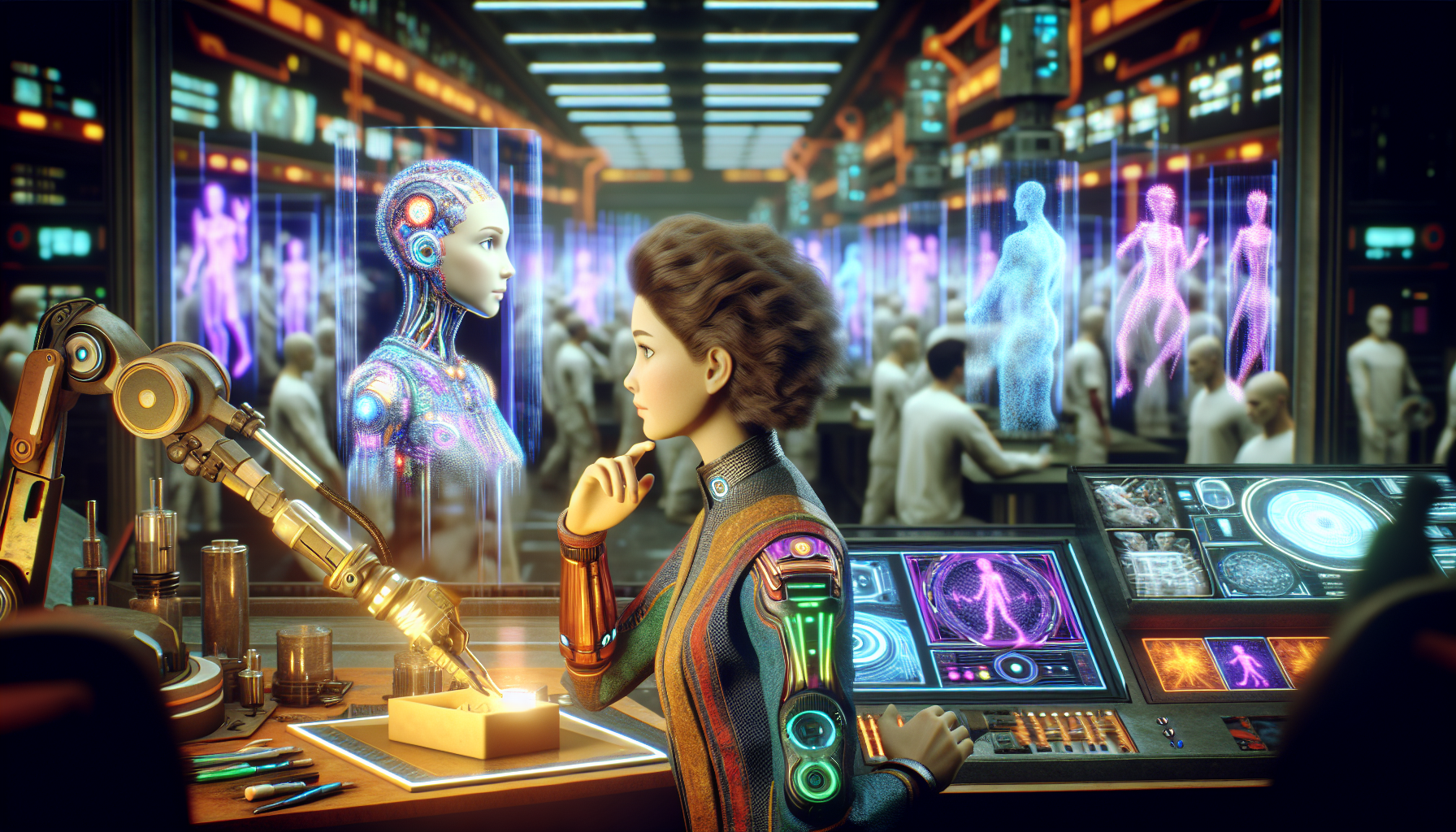

Artificial intelligence is rapidly reshaping the entertainment landscape, and a new development from Meta blurs the line between real and synthetic footage even further. Meet MoCha (short for Movie Character Animator), an AI model built by Meta in partnership with the University of Waterloo. It generates lifelike talking video characters and could change how films, television shows, and digital media are produced.

What Is Meta MoCha?

An Advancement in AI-Generated Video Content

MoCha is more than a typical AI tool. Unlike conventional video editing software or character animation systems, it is a text-to-video model that generates a character’s visual performance and matches it to speech within a single scene. Users supply a short text description of the scene along with an audio clip. MoCha then produces a video in which the characters deliver the lines with matching lip movements, facial expressions, emotions, and gestures.

MoCha began as a research project and is still at the testing stage. Even so, early demonstrations are impressive: realistic human characters, smooth lip-syncing, and dynamic facial animation. The model can also place multiple speaking characters in one scene, which makes it a potentially transformative tool for the entertainment industry.

Understanding How MoCha Functions

Transforming Text and Audio into Realistic Video

At its core, MoCha combines natural language processing, computer vision, and speech analysis. The user first supplies a scene description and an audio sample. The model then analyzes the audio and times the character’s facial expressions, lip movements, and body gestures to the tone and cadence of the speech.
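To make the idea of audio-driven timing more concrete, here is a toy Python sketch of one generic step that any speech-driven animation system has to perform: deciding which slice of the audio each video frame corresponds to. It is not MoCha’s implementation, which has not been released publicly. The function names `frame_audio_windows` and `window_energy`, the 16 kHz sample rate, the 25 fps frame rate, and the one-frame context on each side are all illustrative assumptions. Per-window loudness (RMS energy) stands in, very crudely, for the "cadence" that a real model would learn from much richer speech features.

```python
import math
from typing import List, Tuple


def frame_audio_windows(num_samples: int, sample_rate: int, fps: float,
                        context: int = 1) -> List[Tuple[int, int]]:
    """For each video frame, return the (start, end) audio-sample range it is
    paired with: the frame's own slice plus `context` frames on either side.
    (Hypothetical helper for illustration only.)"""
    samples_per_frame = sample_rate / fps
    num_frames = int(num_samples // samples_per_frame)
    windows = []
    for f in range(num_frames):
        # Clamp the padded window to the bounds of the audio clip.
        start = max(0, int((f - context) * samples_per_frame))
        end = min(num_samples, int((f + 1 + context) * samples_per_frame))
        windows.append((start, end))
    return windows


def window_energy(audio: List[float], windows: List[Tuple[int, int]]) -> List[float]:
    """Root-mean-square loudness of each window: a crude proxy for speech cadence.
    (Hypothetical helper for illustration only.)"""
    energies = []
    for start, end in windows:
        chunk = audio[start:end]
        energies.append(math.sqrt(sum(x * x for x in chunk) / len(chunk)))
    return energies


# Hypothetical input: 1 s of 16 kHz audio, silent for 0.5 s, then a 220 Hz tone.
sr, fps = 16_000, 25
audio = [0.0] * (sr // 2) + [math.sin(2 * math.pi * 220 * n / sr) for n in range(sr // 2)]
windows = frame_audio_windows(len(audio), sr, fps)
energy = window_energy(audio, windows)
print(len(windows), windows[0], windows[10])  # 25 (0, 1280) (5760, 7680)
```

Frames in the silent first half get zero energy, and frames in the tone half get a clearly nonzero value. A production system would need far richer cues than loudness, such as which sounds are being spoken and the emotional tone, to drive convincing lip and face motion.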

Meta’s research team trained MoCha on a dataset of around 500,000 samples, totaling roughly 300 hours of high-quality speech and video material. The exact sources of this data have not been disclosed (a common problem across the AI industry). Even so, the scale and quality of the training set appear to be a major reason MoCha’s output looks so realistic.
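As a rough sanity check on these figures, assume both numbers are taken at face value. In that case the average training sample works out to just over two seconds:

```python
# Approximate figures reported for MoCha's training set (both are rounded).
SAMPLES = 500_000
HOURS = 300

total_seconds = HOURS * 60 * 60
avg_seconds_per_sample = total_seconds / SAMPLES
print(f"{total_seconds:,} s total, about {avg_seconds_per_sample:.2f} s per sample")
# 1,080,000 s total, about 2.16 s per sample
```

Both inputs are approximate, and the distribution of clip lengths has not been published. The result is therefore only an average, not a description of any individual sample.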

Contrasting MoCha with Other AI Video Solutions

Runway Gen-4 vs. MoCha

Runway’s Gen-4 video model is another strong contender in AI video generation. It lets users produce cinematic clips from a single image and a text prompt. Compared with MoCha, Runway Gen-4 is a more polished, consumer-ready product, and its demos show stronger scene continuity and overall visual quality.

MoCha, by contrast, stands out for creating talking characters with emotional depth. It may trail Gen-4 slightly in visual polish, but its real strength is character-driven storytelling.

Other Competitors: Microsoft’s VASA-1 and ByteDance’s OmniHuman-1

MoCha is not the only contender in this field. Microsoft’s VASA-1 and ByteDance’s OmniHuman-1 also turn still images into animated, talking characters, reproducing facial and even body movements with impressive precision.

For example, VASA-1 can turn a single still image into a lively talking video driven by an audio clip. OmniHuman-1 goes a step further by also generating body gestures. Both have sparked debate about their ethical implications, particularly around deepfakes and misinformation.

Potential Uses in Film and Beyond

Cutting Production Costs

MoCha and similar AI tools could dramatically reduce the cost of film and video production. Traditional special effects, voice dubbing, and CGI character animation are often slow and expensive. AI-generated content could streamline these tasks. That would let smaller studios and independent creators reach a level of production quality previously beyond their budgets.

Virtual Influencers, Marketing, and Learning

Beyond film, MoCha’s capabilities could be applied to virtual influencers, digital advertising, and educational content. Brands could create realistic spokespersons for their campaigns without hiring actors. Educational platforms could build interactive, animated lessons led by AI-generated instructors.

Ethical Considerations and Industry Openness

The Deepfake Challenge

Like any powerful technology, MoCha raises ethical dilemmas. The ability to fabricate hyper-realistic videos of people saying things they never said raises concerns about deepfakes and other forms of abuse.

Researchers and developers must therefore prioritize transparency and safety. That means disclosing where training data comes from, watermarking generated videos, and building tools that can detect AI-generated material.

MoCha and the Future of AI in Entertainment

Although MoCha is not yet available to the public, its development signals a shift in how digital content may be created. AI-generated characters, once a futuristic concept, are quickly becoming a practical reality. As MoCha matures, it could open a new chapter in storytelling, one that gives creators far more freedom to bring their ideas to the screen.

Conclusion

Meta’s MoCha shows how artificial intelligence is reshaping the entertainment industry. By generating realistic talking characters from simple inputs, it could democratize video production and transform creative workflows. That power also brings responsibility. As the gap between reality and artificial creation narrows, ethical use, transparency, and accountability must remain central to AI development.

Frequently Asked Questions (FAQ)

1. What is Meta MoCha, and how does it function?

Meta MoCha (Movie Character Animator) is an AI text-to-video model developed by Meta and the University of Waterloo. It generates realistic talking characters from a text prompt and an audio sample. It synchronizes lip movements, facial expressions, and body language to produce lifelike video clips.

2. How does MoCha measure up to other AI video tools?

MoCha belongs to the same field as Runway Gen-4, Microsoft’s VASA-1, and ByteDance’s OmniHuman-1. Runway offers more polished visuals, while MoCha appears stronger on character realism and emotional expression. VASA-1 and OmniHuman-1 also generate talking characters, but they focus mainly on animating a static image.

3. Can MoCha be used in professional filmmaking?

Potentially. MoCha is still a research project, but it could significantly cut time and costs in film production. Likely uses include special effects, voice dubbing, and character animation.

4. Are there ethical implications regarding MoCha?

Yes. Like other AI technologies that can generate realistic human likenesses, MoCha could be misused to create deepfakes or spread misinformation. Developers need to build in safeguards, such as watermarking and detection tools, and promote responsible use.

5. Is MoCha available to the public?

MoCha is currently a research project and is not available for public or commercial use. Meta has not announced an official timeline for a public release.

6. What type of data was used to train MoCha?

MoCha was trained on a dataset of roughly 500,000 video samples, totaling about 300 hours of speech and video content. Meta has not revealed the specific sources of this data, which raises questions about transparency.

7. Is MoCha capable of creating animated characters for advertising or education?

Potentially. Because MoCha generates lifelike talking characters, it could be used for advertising, educational videos, and even virtual influencers, extending its reach beyond filmmaking.